Stability AI recently announced Stable Diffusion 3 (SD3), its latest image-synthesis model, positioned as a rival to proprietary systems such as OpenAI’s DALL-E. Like its predecessors, SD3 generates detailed images from text prompts. Stability AI did not release a public demo alongside the announcement, but it has opened a waitlist for people who want to try SD3.

According to Stability AI, the Stable Diffusion 3 family encompasses models ranging from 800 million to 8 billion parameters, catering to a wide range of devices from smartphones to servers. This scalability ensures that users can deploy the model locally while achieving the desired level of detail and quality in image generation. However, larger models may require additional VRAM on GPU accelerators to function optimally.
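To make the VRAM point concrete, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy at the two ends of the announced range. The precision options and the helper name `weight_memory_gb` are illustrative assumptions, not figures from Stability AI, and real usage would be higher once activations, text encoders, and the image decoder are included.

```python
# Rough estimate of memory needed just to hold model weights.
# Assumption: every parameter is stored at the same precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Parameter counts from the announced range: 800 million to 8 billion.
    for label, params in [("smallest", 800_000_000), ("largest", 8_000_000_000)]:
        for dtype in ("fp32", "fp16"):
            gb = weight_memory_gb(params, dtype)
            print(f"{label} model, {dtype}: about {gb:.1f} GB for weights")
```

Under these assumptions, the gap between the smallest and largest model is a factor of ten in weight storage, which is why the smaller variants are plausible on phones while the larger ones call for a dedicated GPU.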

Stability AI has been a prominent name in AI image generation since 2022, with a series of releases including Stable Diffusion 1.4, 1.5, 2.0, 2.1, XL, and XL Turbo. Positioned as an open alternative to proprietary models like DALL-E, the Stable Diffusion models have attracted both attention and scrutiny, the latter over copyright and bias concerns. Because the model weights are openly available, users can run the models locally and fine-tune them to customize their output.

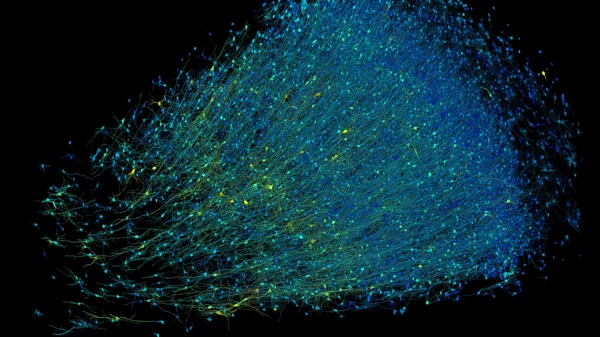

CEO Emad Mostaque highlighted some of the technical advances in SD3, including a new type of diffusion transformer, similar to the one used in OpenAI’s Sora, combined with flow matching and other improvements. According to Mostaque, building on transformers lets the model scale further and accept multimodal inputs.

The diffusion transformer replaces the usual image-building blocks of earlier diffusion models, such as the U-Net backbone, with a transformer that works on small patches of the image. Operating on patches rather than the whole picture at once is what lets the architecture scale, and Stability AI says it also improves image quality; sample images are available on Stability’s website.
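The idea of working on small pieces of an image can be sketched in a few lines. The example below splits a tiny 4×4 grid into 2×2 patches and flattens each one into a token, which is the general form of input a transformer consumes. It is a generic illustration only: the function name `patchify`, the patch size, and the use of plain lists are assumptions, and SD3’s actual patching scheme is not described in the announcement.

```python
from typing import List

Grid = List[List[float]]


def patchify(image: Grid, patch: int) -> List[List[float]]:
    """Split a 2-D grid into non-overlapping patch x patch tiles.

    Each tile is flattened row by row into one token. Tiles are
    emitted left to right, then top to bottom.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")

    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append(
                [
                    image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)
                ]
            )
    return tokens


if __name__ == "__main__":
    # A 4x4 "image" whose values are simply 0..15 for easy inspection.
    grid = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    for token in patchify(grid, patch=2):
        print(token)
```

Doubling the image side length quadruples the number of tokens, so the sequence length a transformer must handle grows with resolution rather than the network itself having to change shape.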

Furthermore, SD3 incorporates “flow matching,” a technique for training a model to move smoothly from random noise to a structured image without having to simulate every intermediate step of that process. Rather than tracking each step, the model learns the overall direction the image should take, which is intended to improve both efficiency and output quality.
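A toy version of this idea fits in a short script. The sketch below assumes the simplest straight-line variant of flow matching: a point on the path between a noise sample and a data sample is a linear blend of the two, and the training target at that point is just the constant direction from noise to data. Whether SD3 uses exactly this path is not stated in the announcement, and all names here (`interpolate`, `target_velocity`, `euler_sample`) are hypothetical. A real system would train a neural network to predict the velocity; here an "oracle" that already knows the answer stands in for the trained model.

```python
import random
from typing import Callable, List

Vector = List[float]


def interpolate(noise: Vector, data: Vector, t: float) -> Vector:
    """Point on the straight line from noise (t=0) to data (t=1)."""
    return [(1 - t) * n + t * d for n, d in zip(noise, data)]


def target_velocity(noise: Vector, data: Vector) -> Vector:
    """Direction a model would be trained to predict along that line."""
    return [d - n for n, d in zip(noise, data)]


def euler_sample(
    velocity_fn: Callable[[Vector, float], Vector], start: Vector, steps: int
) -> Vector:
    """Follow a velocity field from t=0 to t=1 with fixed Euler steps."""
    x = list(start)
    dt = 1.0 / steps
    for i in range(steps):
        v = velocity_fn(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


if __name__ == "__main__":
    random.seed(0)
    data = [1.0, -2.0, 0.5]  # stand-in for an "image"
    noise = [random.gauss(0.0, 1.0) for _ in data]

    # Oracle stand-in for a trained network: returns the true direction.
    def oracle(x: Vector, t: float) -> Vector:
        return target_velocity(noise, data)

    sample = euler_sample(oracle, noise, steps=4)
    print("recovered:", [round(s, 6) for s in sample])
```

Because the path is a straight line, a handful of coarse steps is enough to travel from noise to the data point in this toy setting, which illustrates why learning a direction can be cheaper than simulating a long noising and denoising chain.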

While SD3’s widespread availability is pending, Stability AI has assured users that once testing is complete, the model will be freely accessible for local deployment. This preview phase is crucial for gathering insights to enhance performance and safety before a full release.

Alongside SD3, Stability AI continues to experiment with other image-synthesis architectures. In addition to SDXL and SDXL Turbo, the company recently announced Stable Cascade, which uses a three-stage process for text-to-image synthesis. Together, these releases show the company pursuing several approaches to AI image synthesis in parallel.