A team of researchers at Stanford University is developing an AI-assisted holographic imaging technology for augmented reality (AR) headsets. The technology is touted as thinner, lighter, and higher in quality than existing solutions.

The current lab prototype has a limited field of view of just 11.7 degrees. However, Stanford’s Computational Imaging Lab has shown visual demonstrations that indicate its potential: a thinner stack of holographic components that could feasibly fit into standard glasses frames and project lifelike, full-color, moving 3D images at varying depths.

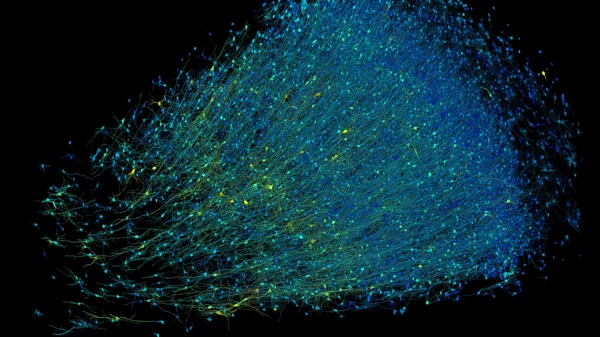

These AR glasses use waveguides to direct light through the lenses and into the wearer’s eyes. What sets them apart is a unique “nanophotonic metasurface waveguide” that eliminates the need for bulky collimation optics, along with a “learned physical waveguide model” that uses AI algorithms to significantly enhance image quality. The study notes that these models are automatically calibrated using camera feedback.

The Stanford technology is still at the prototype stage, with working models attached to a bench and to 3D-printed frames. Even so, the researchers aim to disrupt a spatial computing market dominated by bulky passthrough mixed reality headsets like Apple’s Vision Pro and Meta’s Quest 3.

Gun-Yeal Lee, a postdoctoral researcher involved in the project and co-author of the paper published in Nature, asserts that no other AR system matches the combination of capability and compactness offered by their technology.

Tech giants like Meta have invested heavily in AR glasses technology, striving to create a “holy grail” product resembling regular eyewear. Although Meta’s current Ray-Bans lack an onboard display, leaked hardware roadmaps suggest a target date of 2027 for Meta’s first true AR glasses.